Crawlability is a fundamental concept in the world of search engine optimisation (SEO) that refers to the ease with which search engines can access and traverse the content of a website. As a webmaster and technical SEO specialist, I understand that ensuring a website is crawlable involves creating a clear and efficient path for search engine bots to follow. This includes strategies such as having a logical website structure, a well-crafted sitemap, and ensuring that important content isn’t hidden behind login forms or impenetrable JavaScript.

The importance of crawlability lies in the fact that it’s the first step to having a website’s content indexed and subsequently ranked in search engine results. If a site’s pages are not easily discoverable by search engines like Google, they might as well be invisible to people searching online. Through understanding and resolving common crawlability issues, such as broken links or incorrect use of robots.txt files, I aim to make websites more accessible to search engines, thereby increasing their chances of ranking well and reaching their target audience.

By employing the right tools and practices, I routinely check and enhance the crawlability of websites. This could involve rectifying incorrect implementations of redirect directives or eliminating duplicate content issues that could confound search engine bots. The goal is to streamline the process by which search engine crawlers, also referred to as spiders or bots, navigate a website’s pages to ensure that valuable content is indexed correctly and efficiently.

Crawlability refers to the ability of search engine bots to access and navigate a website’s content. To understand my website’s presence on search engines, I treat crawlability as the first step that search engine bots such as Googlebot take to discover and read my pages. This process is foundational, as it determines whether my content can be indexed and hence become a candidate for ranking.

For me, ensuring my website is crawlable is a top priority because it directly impacts my SEO efforts. If my website has high crawlability, it means that search engine bots can easily access all the pages, understand their content, and thus, are more likely to include them in the search engine’s index. This is a prerequisite before my website can rank in search results, highlighting why crawlability is essential for SEO success.

In the realm of search engines, crawlers are the backbone that drives the discovery of new and updated content across the internet. Let’s explore how they operate and the behavioural patterns they follow.

Crawlers, also known as bots or spiders, are automated scripts developed by search engines like Google to traverse the internet. They systematically browse content by following links from one webpage to another. This activity creates a map, known as an index, that search engines use to retrieve information quickly when a user performs a search.
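To make the link-following idea concrete, here is a minimal Python sketch of breadth-first discovery. It is an illustration only, not how any real search engine crawler is implemented: the `SITE` dictionary, its URLs and the `discover` helper are hypothetical, and the pages are held in memory so the example runs without network access.

```python
from collections import deque
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collects the href value of every <a> tag in a page."""

    def __init__(self):
        super().__init__()
        self.links = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                self.links.append(href)


def discover(start_url, pages):
    """Breadth-first discovery over `pages`, a dict of URL -> HTML.

    `pages` stands in for the web so the sketch runs offline.
    Returns the URLs in the order they were discovered.
    """
    seen = [start_url]
    queue = deque([start_url])
    while queue:
        url = queue.popleft()
        parser = LinkExtractor()
        parser.feed(pages.get(url, ""))
        for href in parser.links:
            target = urljoin(url, href)  # resolve relative links
            if target not in seen and target in pages:
                seen.append(target)
                queue.append(target)
    return seen


# Hypothetical four-page site; /orphan has no inbound links.
SITE = {
    "https://www.example.com/": '<a href="/blog/">Blog</a>',
    "https://www.example.com/blog/": '<a href="/blog/post-1">Post</a>',
    "https://www.example.com/blog/post-1": '<a href="/">Home</a>',
    "https://www.example.com/orphan": "<p>No inbound links</p>",
}

found = discover("https://www.example.com/", SITE)
```

In this sketch the `/orphan` page is never discovered because nothing links to it, which is the practical reason unlinked pages tend to be hard for crawlers to find.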

Crawlers adhere to specific behavioural protocols, which govern how and when they visit websites. These behaviours are influenced by a set of rules defined by website owners in a file named robots.txt. Crawlers examine this file to learn which pages they are allowed to crawl. Additionally, each crawler has a user-agent string that identifies it to websites, allowing webmasters to tailor the response accordingly.

Googlebot is perhaps the most widely acknowledged crawler, performing the crucial task of collecting information for Google’s search engine index. However, it’s not the only one: other search engines have their own crawlers, such as Bing’s Bingbot.

By understanding how crawlers work, webmasters can optimise their sites for better visibility and indexing by search engines, enhancing their presence on the internet.

In managing a website, I know that sitemaps and robots.txt files are crucial for directing how search engines interact with the site. Properly optimising these files can significantly enhance a site’s visibility and indexability.

XML sitemaps serve as a roadmap, allowing search engine crawlers to quickly discover all relevant URLs on my site. I keep a few best practices in mind when optimising my XML sitemap:

By following these steps, I make sure search engines can find and understand all of the content I intend for users to discover.
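As a rough sketch of what such a file contains, the Python snippet below builds a small XML sitemap with the standard library. The URLs and `lastmod` dates are hypothetical placeholders, and `build_sitemap` is an illustrative helper rather than part of any SEO tool.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Return sitemap XML for (url, lastmod) pairs; lastmod may be None."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        if lastmod:
            ET.SubElement(url, "lastmod").text = lastmod
    xml_bytes = ET.tostring(urlset, encoding="utf-8", xml_declaration=True)
    return xml_bytes.decode("utf-8")


# Hypothetical pages; only URLs meant to be indexed belong here.
sitemap_xml = build_sitemap([
    ("https://www.example.com/", "2024-01-15"),
    ("https://www.example.com/blog/crawlability/", "2024-02-01"),
    ("https://www.example.com/contact/", None),
])
print(sitemap_xml)
```

Generating the file from the same source that knows which pages exist (a CMS or database, for instance) is one way to keep it in step with the site, though the right approach depends on the platform.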

The robots.txt file is a powerful tool that helps me manage and control the way search engine crawlers scan my site. Here are some specific strategies I use:

    User-agent: Googlebot
    Disallow: /private/
    Allow: /public/
    Sitemap: https://www.example.com/sitemap.xml

By crafting a precise robots.txt file, I effectively guide search engines through what to crawl and what to skip, which optimises my site’s crawl budget and keeps my most important pages in the spotlight.
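Before deploying rules like these, it can help to check how a parser reads them. The sketch below feeds the example rules into Python's standard-library `urllib.robotparser`; the two page URLs being tested are hypothetical. Note that this parser applies rules in file order, which may differ from how an individual search engine resolves conflicting rules, so treat the result as a sanity check rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# The same rules as the example robots.txt, one directive per line.
ROBOTS_TXT = """\
User-agent: Googlebot
Disallow: /private/
Allow: /public/

Sitemap: https://www.example.com/sitemap.xml
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

# Hypothetical URLs used only to exercise the rules.
private_ok = parser.can_fetch(
    "Googlebot", "https://www.example.com/private/report.html"
)
public_ok = parser.can_fetch(
    "Googlebot", "https://www.example.com/public/guide.html"
)
sitemaps = parser.site_maps()  # available in Python 3.8+

print(private_ok, public_ok, sitemaps)
```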

Crawlability doesn’t just hinge on the content I produce; the structure of my website plays a crucial role in how easily search engines can navigate and interpret the site.

When I consider my site architecture, I’m looking at the blueprint of my website which informs how the navigation menu and all the pages are organised. A logical and straightforward architecture ensures that search engines can crawl my site more effectively. For example, I ensure that my important pages are no more than a few clicks away from the home page, which enhances the discoverability of these pages.

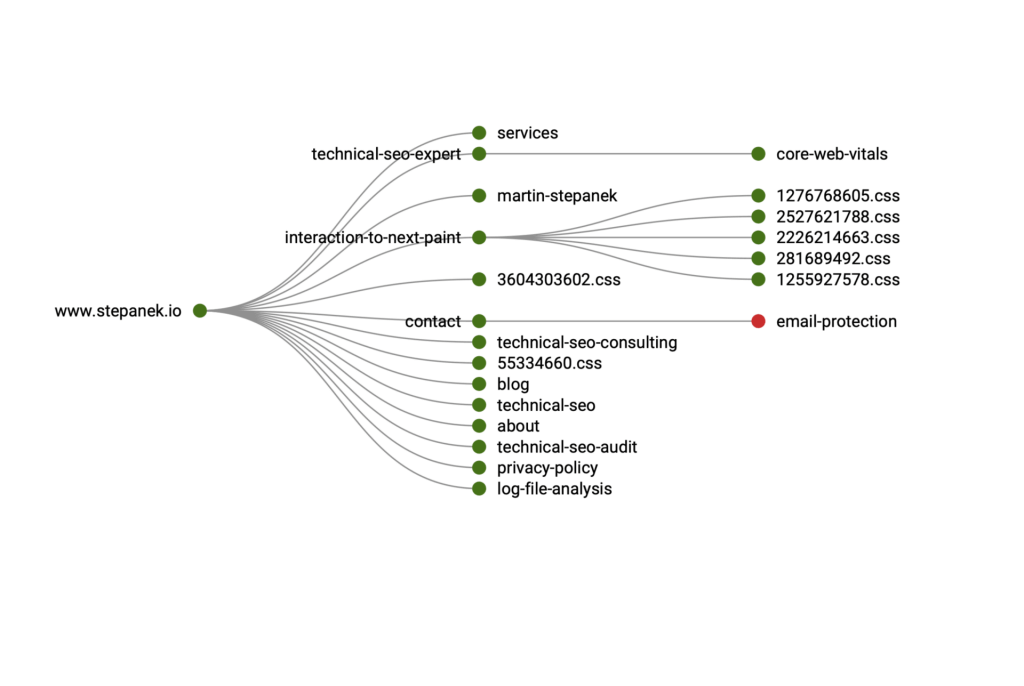

A clear hierarchy not only provides a better user experience but also makes it easier for search engines to crawl my website. I organise my content in a tiered layout with the most important categories at the top of the hierarchy. This structure resembles an upside-down tree, with the home page as the root at the top, branching down into sections and sub-sections. The clarity of my site structure directly impacts how effectively a search engine indexes my site, which in turn affects my online visibility.
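One way to check the "few clicks from the home page" guideline is to compute each page's click depth. The following Python sketch does this with a breadth-first search over a hypothetical internal-link map; the paths in `LINKS` and the depth threshold of 2 are assumptions chosen for illustration, not a recommended limit.

```python
from collections import deque


def click_depths(links, home):
    """Return the minimum number of clicks from `home` to each page.

    `links` maps a page path to the paths it links to.
    """
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:  # first visit is the shortest path
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


# Hypothetical site structure.
LINKS = {
    "/": ["/services/", "/blog/"],
    "/services/": ["/services/seo-audit/"],
    "/blog/": ["/blog/crawlability/"],
    "/blog/crawlability/": ["/blog/archive/2019/old-post/"],
}

depths = click_depths(LINKS, "/")
# Pages deeper than the assumed threshold of 2 clicks.
too_deep = sorted(page for page, d in depths.items() if d > 2)
print(too_deep)
```

Pages that show up in `too_deep` are candidates for a link from a higher-level category page.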

Internal links and navigational cues significantly enhance my website’s crawlability and user experience. They play a pivotal role in how both users and search engines navigate through my content.

To optimise my internal linking strategies, I meticulously analyse the link distribution within my site. I ensure that my most authoritative pages distribute link equity effectively to other relevant pages. Using descriptive anchor text is also crucial, as it helps search engines understand the context of linked pages. I audit my internal links regularly to identify and fix any issues, maintaining a robust internal link structure that supports both usability and SEO.
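A simple starting point for this kind of audit is counting how many internal links point at each page. The Python sketch below assumes a hypothetical link map (`LINKS`) of the sort a crawl export might provide; pages with zero inbound links are the ones crawlers are least likely to reach.

```python
from collections import Counter


def inbound_link_counts(links):
    """Count distinct pages linking to each page, ignoring self-links."""
    counts = Counter({page: 0 for page in links})
    for source, targets in links.items():
        for target in set(targets):
            if target != source:
                counts[target] += 1
    return counts


# Hypothetical internal-link map: page -> pages it links to.
LINKS = {
    "/": ["/blog/", "/services/"],
    "/blog/": ["/services/", "/"],
    "/services/": ["/"],
    "/landing/old-offer/": ["/"],
}

counts = inbound_link_counts(LINKS)
orphans = sorted(page for page, n in counts.items() if n == 0)
print(counts.most_common(), orphans)
```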

Breadcrumb navigation offers a clear path for users to trace back to higher-level categories within my site. It improves user experience by showing where users are in the site structure at any given moment. For search engines, breadcrumb links bolster the thematic relevancy of my pages and support a better understanding of my site’s architecture.
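One common way to describe a breadcrumb trail to search engines is schema.org `BreadcrumbList` markup in JSON-LD. The Python sketch below generates that markup for a hypothetical trail; the page names and URLs are placeholders, and whether structured data suits a given site is a separate decision.

```python
import json


def breadcrumb_jsonld(trail):
    """Build schema.org BreadcrumbList markup from (name, url) pairs."""
    return {
        "@context": "https://schema.org",
        "@type": "BreadcrumbList",
        "itemListElement": [
            {"@type": "ListItem", "position": i, "name": name, "item": url}
            for i, (name, url) in enumerate(trail, start=1)
        ],
    }


# Hypothetical trail: Home > Blog > Crawlability.
markup = breadcrumb_jsonld([
    ("Home", "https://www.example.com/"),
    ("Blog", "https://www.example.com/blog/"),
    ("Crawlability", "https://www.example.com/blog/crawlability/"),
])

# The JSON-LD is embedded in the page inside a script tag.
script_tag = (
    '<script type="application/ld+json">\n'
    + json.dumps(markup, indent=2)
    + "\n</script>"
)
print(script_tag)
```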

By embedding efficient internal linking and intuitive breadcrumb navigation, I ensure my website is user-friendly and search engine ready, leveraging these tools for improved discoverability and indexing.

In my approach to technical SEO, I focus on ensuring websites are not just visible but also performing at their peak. This requires a detailed examination of the site’s infrastructure.

It’s fundamental that a site adapts smoothly to various devices, which is why I prioritise responsive design. My audits look for flexible layouts, images, and CSS media queries to ensure content is accessible and legible on all devices. Page loading speed is another critical factor; a swift loading site is essential for retaining users and improving search rankings. I employ several strategies, including compressing images, minifying CSS and JavaScript, and leveraging browser caching, all with the aim of enhancing the performance and user experience of the site.

Strategies for improving page loading speed:

Within the realm of technical SEO, I also dedicate my efforts to resolving issues of duplicate content. This is where canonical tags (rel=canonical) come into play. I use these HTML link elements to indicate the preferred version of a web page, which tells search engines which URL should be treated as the original and indexed. By implementing canonical tags correctly, I reduce the risk of duplicate URLs competing against each other in search results and help ensure that link equity is consolidated on the correct URLs.
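When auditing canonical tags, a useful first check is simply which canonical URL, if any, a page declares. The Python sketch below extracts `<link rel="canonical">` values from an HTML string using the standard library; the sample page and its URL are hypothetical.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collects href values from <link rel="canonical"> elements."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rels = (attr.get("rel") or "").lower().split()
        if "canonical" in rels and attr.get("href"):
            self.canonicals.append(attr["href"])


def find_canonicals(html):
    """Return every canonical URL declared in `html` (ideally exactly one)."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


# Hypothetical product page reachable under several URLs.
PAGE = """<html><head>
<title>Blue widgets</title>
<link rel="canonical" href="https://www.example.com/widgets/blue/">
</head><body></body></html>"""

canonicals = find_canonicals(PAGE)
print(canonicals)
```

An empty list, or a list with more than one entry, is usually worth a closer look.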

Key uses for canonical tags:

By addressing these areas, I labour to lay a solid foundation for any website’s online presence, driving both traffic and engagement.

Search engines deploy algorithms that significantly influence crawlability. Essentially, these algorithms decide which pages search engine bots crawl, in what order, and how often. If my site offers what those algorithms favour, such as clear navigation and an easily interpretable structure, it stands a better chance of being crawled effectively. For instance, a robust internal linking structure allows bots to discover and index content more efficiently, facilitating improved crawlability.

Once my website content is crawled and indexed, the algorithm evaluates a variety of factors to ascertain its rankings. These factors include but aren’t limited to keywords, site speed, mobile optimisation, and user engagement. High visibility in search results is contingent upon how favourably the algorithm assesses these factors in comparison to competing content. Moreover, sites that excel across these metrics not only appear higher in the SERPs (Search Engine Results Pages) but also tend to be more visible to users, thus attracting more traffic.

To ensure my website remains discoverable and indexable by search engines, I regularly engage in monitoring and improving its crawlability. This involves leveraging specific tools and practices that allow me to identify and address potential issues that could impede a search engine’s ability to crawl and understand my site’s content.

One of the primary tools I rely on is Google Search Console. It’s an invaluable resource for webmasters to monitor their site’s presence in Google search results. The Search Console provides detailed reports on how my website is performing, including insights into potential crawl errors that may be preventing pages from being indexed effectively.

For a comprehensive site audit and crawl error identification, I often turn to tools like Screaming Frog. This software enables me to emulate how a search engine crawls my website. It provides a clear, detailed map of my site’s structure, identifying broken links, redirects, and other issues that could affect crawlability. With such tools, I’m able to systematically review my website’s URLs, analyse the metadata, and ensure that search engines can crawl my site without encountering any obstacles.

To ensure that the pages on my website are indexed correctly by search engines, it’s crucial to understand and optimise server responses and status codes. The way my server communicates with search engines directly influences the indexability of my site’s content.

When a search engine crawler attempts to access a page on my site, the server responds with a status code. It’s paramount for me to ensure that my server always returns the correct code.

By closely monitoring server responses and ensuring that the correct status codes are consistently returned, I can significantly enhance the indexability of my pages. This process ensures that my content can be indexed and discovered through search engine queries.
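As a rough guide to how status codes are usually read during a crawl audit, the Python sketch below groups them into broad outcomes. The grouping and labels are my own simplification for illustration; individual search engines may treat specific codes differently.

```python
from http import HTTPStatus


def crawl_outcome(status_code):
    """Map an HTTP status code to a broad crawl-audit category."""
    if 200 <= status_code < 300:
        return "ok"
    if status_code in (301, 308):
        return "permanent redirect"
    if status_code in (302, 303, 307):
        return "temporary redirect"
    if status_code in (404, 410):
        return "not found"
    if 400 <= status_code < 500:
        return "client error"
    if 500 <= status_code < 600:
        return "server error"
    return "other"


def describe(status_code):
    """Combine the standard reason phrase with the audit category."""
    try:
        phrase = HTTPStatus(status_code).phrase
    except ValueError:  # code not known to the standard library
        phrase = "Unknown"
    return f"{status_code} {phrase}: {crawl_outcome(status_code)}"


for code in (200, 301, 302, 404, 503):
    print(describe(code))
```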

I’m going to share some insights into the more sophisticated tactics you can employ to refine your website’s crawlability. Effective management of redirects and broken links, as well as a keen understanding of crawl budget optimisation, are central to these practices.

Redirects are a normal part of a website’s evolution, but I ensure they’re implemented strategically to maintain link equity and user experience. It’s critical to use 301 redirects for permanently moved content, as this passes most of the original page’s ranking power to the new location. On the other hand, broken links, including dreaded 404 errors, detrimentally impact user experience and consume valuable crawl budget. It’s my job to routinely audit my site using tools like Screaming Frog or Google Search Console to identify and fix broken links, thus preserving the site’s SEO integrity.
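Redirect audits often come down to spotting chains and loops. The Python sketch below follows a hypothetical redirect map (`REDIRECTS`) from a starting path and reports what it finds; the paths and the `max_hops` limit of 5 are assumptions for the example, not values taken from any search engine's documentation.

```python
def resolve_redirects(start, redirects, max_hops=5):
    """Follow `redirects` (source -> target) from `start`.

    Returns (chain, status) where status is "ok" (zero or one hop),
    "chain" (several hops), "loop", or "too many hops".
    """
    chain = [start]
    current = start
    while current in redirects:
        current = redirects[current]
        if current in chain:  # we have been here before
            chain.append(current)
            return chain, "loop"
        chain.append(current)
        if len(chain) - 1 > max_hops:
            return chain, "too many hops"
    status = "ok" if len(chain) <= 2 else "chain"
    return chain, status


# Hypothetical redirect map, e.g. exported from server configuration.
REDIRECTS = {
    "/old-page": "/interim-page",
    "/interim-page": "/new-page",
    "/a": "/b",
    "/b": "/a",
}

print(resolve_redirects("/old-page", REDIRECTS))
print(resolve_redirects("/a", REDIRECTS))
```

A result of "chain" suggests pointing the first URL straight at the final destination so that crawlers and users need only one hop.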

When I look at crawl budget, it’s about maximising the efficiency with which search engines crawl my site. Each site is allocated a certain ‘budget’ by search engines; this is essentially the number of pages a search engine will crawl in a given timeframe. I optimise my crawl budget by ensuring that my site hierarchy is logical and that high-value pages are crawled more frequently than those of lesser importance. I also make sure to avoid duplicate content, as it can waste crawl budget, and improve page load times, which can encourage search engines to crawl my site more often.

I analyse my website's crawlability by using tools like Google Search Console and Screaming Frog which show the crawl rate and any crawl errors. It's essential for me to ensure that search engines can access my content without issues.

I improve crawlability by streamlining site structure, increasing page load speed, and ensuring that I have a comprehensive sitemap. Proper use of robots.txt also aids in directing crawler access effectively.

Crawlability pertains to a crawler's ability to navigate through a website and access its pages, whereas indexability goes a step further, determining whether a crawled page can be added to a search engine's index, making it eligible to appear in search results.

To optimise my website's crawlability, I focus on creating a clear hierarchy, using internal linking wisely, and avoiding duplicate content. It's vital that I also fix broken links which can hinder crawlers from navigating the site.

Search engines employ bots to follow links and discover pages. They assess the structure, content accessibility, and any directives provided in robots.txt to ascertain a website's crawlability.

Yes, the ability of search engines to crawl my website can directly affect its rankings. If they cannot crawl the site effectively, this may limit the visibility of my content in search results, thereby impacting its search performance.
