Indexability is fundamental to my efforts in search engine optimisation (SEO). As a technical SEO specialist, I understand that for any webpage to appear in search results, it must first be accessible to a search engine like Google.

Indexability refers to a search engine’s ability to analyse and add a webpage to its index, which is a database containing billions of pages. If my content isn’t indexable, it quite simply can’t gain traffic through search queries, which is critical for achieving visibility and improving rankings.

My approach to ensuring content is indexable involves a multifaceted strategy.

I monitor factors like whether my web pages return a 200 status code to confirm they are found, use clear and structured HTML to aid search engines in understanding my content, and ensure that my robots.txt file doesn’t inadvertently block crucial pages.

It’s about making my content not only accessible but also easy for search engines to understand and categorise.

This way, when relevant queries are made, my content has the best chance of being displayed prominently, hence increasing my site’s organic traffic.
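The three checks mentioned above (status code, robots.txt, and page-level directives) can be combined into one small checklist. This is only a sketch: `PageSnapshot` and `indexability_problems` are hypothetical names of my own, and the snapshot is assumed to have been filled in by whatever crawler or audit tool is in use.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class PageSnapshot:
    """Hypothetical record of what a crawl of one URL returned."""
    url: str
    status_code: int
    allowed_by_robots: bool
    meta_robots: Optional[str] = None  # content of <meta name="robots">, if present


def indexability_problems(page: PageSnapshot) -> List[str]:
    """Return human-readable reasons the page may not be indexable."""
    problems = []
    if page.status_code != 200:
        problems.append(f"returns HTTP {page.status_code}, not 200")
    if not page.allowed_by_robots:
        problems.append("blocked from crawling by robots.txt")
    # Directives are comma-separated and case-insensitive.
    directives = {d.strip().lower() for d in (page.meta_robots or "").split(",")}
    if "noindex" in directives or "none" in directives:
        problems.append("meta robots tag forbids indexing")
    return problems


page = PageSnapshot("https://example.com/old", 404, False, "noindex, nofollow")
for problem in indexability_problems(page):
    print(f"{page.url}: {problem}")
```

An empty list means none of these three blockers was found; it does not guarantee that a search engine will choose to index the page.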

Indexability directly influences how a webpage is discovered and ranked by search engines. If a page is indexable, it means that search engines such as Google can analyse its content and deem it suitable to appear in search results for relevant queries.

High indexability is vital for SEO as it enables webpages to be found by potential visitors, affecting visibility and traffic.

Search engines utilise complex algorithms and web crawlers, sometimes known as spiders, to find and index webpages.

These crawlers navigate the web, assessing pages for indexability. Factors that affect indexability include the use of meta tags, the presence of a robots.txt file, and whether the content is accessible without requiring user interaction.

Googlebot, Google’s crawler, plays a pivotal role in this process. It crawls webpages, following links to discover new content while re-evaluating already indexed pages for updates or changes.

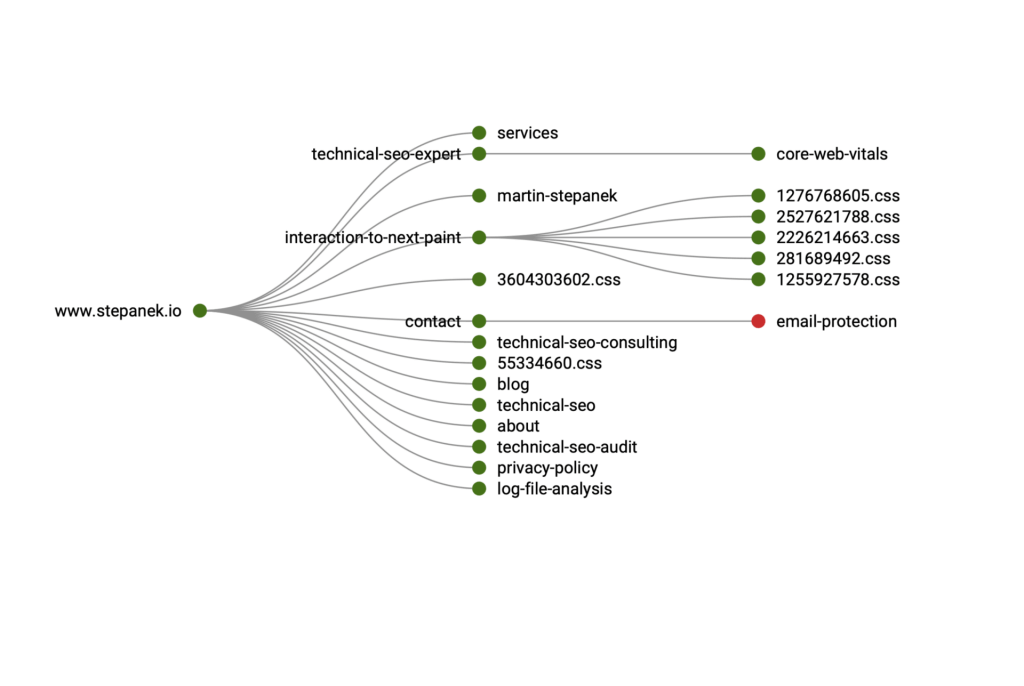

It’s essential that my website is structured in a way that is easily navigable by these bots, with a clean hierarchy and no broken links, ensuring all important content is indexed efficiently.

Optimising your site structure is a fundamental step to ensure that search engines can efficiently index your webpages.

Creating an XML sitemap is vital, as it acts like a roadmap, guiding search engines to all your important pages.

This file should list the URLs of your site along with additional metadata about each URL, such as when it was last updated and its importance relative to other URLs.

Submitting your XML sitemap through Google Search Console enhances the visibility of your webpages to the Google indexing process.
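As an illustration of the sitemap format described above, here is a minimal sketch that builds one with Python's standard library. The `build_sitemap` helper and the example URLs are assumptions for illustration; the `urlset`, `url`, `loc`, `lastmod`, and `priority` elements come from the sitemaps.org protocol. How much weight a given search engine places on `priority` varies, so it should be treated as a hint at most.

```python
import xml.etree.ElementTree as ET

SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"


def build_sitemap(entries):
    """Build sitemap XML from (loc, lastmod, priority) tuples.

    lastmod is a date string such as "2024-01-15";
    priority is a float between 0.0 and 1.0.
    """
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for loc, lastmod, priority in entries:
        url = ET.SubElement(urlset, "url")
        ET.SubElement(url, "loc").text = loc
        ET.SubElement(url, "lastmod").text = lastmod
        ET.SubElement(url, "priority").text = f"{priority:.1f}"
    body = ET.tostring(urlset, encoding="unicode")
    return '<?xml version="1.0" encoding="UTF-8"?>\n' + body


print(build_sitemap([
    ("https://example.com/", "2024-01-15", 1.0),
    ("https://example.com/blog/", "2024-01-10", 0.8),
]))
```

The resulting file would typically be saved as `sitemap.xml` at the site root before being submitted.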

An effective internal linking strategy facilitates easier navigation for both users and search engine crawlers.

It’s crucial to ensure that every subpage is accessible through clear and logical hyperlinks.

My focus is on creating a hierarchy starting with the homepage, branching out to main categories, and flowing down to individual pages.

This method helps distribute page authority and ranking power throughout the site.
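One way to sanity-check such a hierarchy is to measure how many clicks each page sits from the homepage and to look for pages that no internal link reaches. The sketch below assumes the internal links have already been collected into a dictionary; `click_depths`, `orphan_pages`, and the sample paths are hypothetical.

```python
from collections import deque


def click_depths(links, home):
    """Breadth-first search: minimum number of clicks from home to each page."""
    depths = {home: 0}
    queue = deque([home])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in depths:
                depths[target] = depths[page] + 1
                queue.append(target)
    return depths


def orphan_pages(links, home):
    """Pages known to the link map that cannot be reached from home."""
    all_pages = set(links) | {t for targets in links.values() for t in targets}
    return all_pages - set(click_depths(links, home))


# Hypothetical internal link map: page -> pages it links to.
links = {
    "/": ["/blog", "/shop"],
    "/blog": ["/blog/post-1"],
    "/shop": [],
    "/old-landing": ["/"],
}
print(click_depths(links, "/"))
print(orphan_pages(links, "/"))
```

Pages with a large click depth, or pages that show up as orphans, are the ones a crawler following links from the homepage is least likely to reach.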

robots.txt is a text file located in the root directory of your site, and it’s instrumental in crawling control.

I use it to tell search engine bots which parts of the site should not be crawled.

This is useful for keeping crawlers away from duplicate content or private sections of my website. However, robots.txt controls crawling, not indexing: if other sites link to a blocked URL, that page might still be indexed without ever being crawled. To keep a page out of the index, a noindex directive on a page that crawlers are allowed to fetch is the more reliable tool.
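Before deploying a robots.txt file, it can help to test which URLs it would block. The sketch below uses Python's standard `urllib.robotparser`; the rules and URLs are made up for illustration, and the standard-library parser does simple prefix matching, so it may not reproduce every extension (such as wildcards) that individual search engines support.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /search
"""


def blocked_urls(robots_txt, urls, user_agent="Googlebot"):
    """Return the URLs that the given rules disallow for user_agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [u for u in urls if not parser.can_fetch(user_agent, u)]


urls = [
    "https://example.com/",
    "https://example.com/admin/users",
    "https://example.com/blog/post",
]
print(blocked_urls(ROBOTS_TXT, urls))
```

Running a list of important URLs through a check like this is a cheap way to catch a rule that blocks more than intended.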

When I approach the goal of enhancing content for better indexing by search engines, I focus on three key areas: eradicating duplicate content, ensuring the relevance of the content, and improving the content’s quality and visibility. These critical steps help in elevating the indexation of web pages.

I make it a priority to prevent duplicate content issues on my website as they can confuse search engines and dilute my page’s ranking power.

Canonical tags are crucial because they signal to search engines which version of a page is the “main” one and should be indexed; the rest are treated as duplicates.

I always ensure that each piece of content I publish is unique and that canonical tags are used correctly across my web pages.
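To verify that canonical tags are in place, the declared canonical URL can be pulled out of a page's HTML. This is a minimal sketch using the standard `html.parser` module; `CanonicalFinder` and `canonical_urls` are hypothetical names, and the HTML string stands in for a fetched page.

```python
from html.parser import HTMLParser


class CanonicalFinder(HTMLParser):
    """Collect the href of every <link rel="canonical"> in a document."""

    def __init__(self):
        super().__init__()
        self.canonicals = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = dict(attrs)
        rel_values = (attr.get("rel") or "").lower().split()
        if "canonical" in rel_values and attr.get("href"):
            self.canonicals.append(attr["href"])


def canonical_urls(html):
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonicals


html = '<html><head><link rel="canonical" href="https://example.com/shoes"></head></html>'
print(canonical_urls(html))
```

A page that returns no canonical URL, or more than one, is worth a closer look during an audit.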

To keep my content relevant, I continuously align it with the interest and search intent of my target audience.

I conduct thorough keyword research to identify what my audience is looking for and craft my content to address those needs directly.

Providing relevant content not only enhances user experience but also signals to search engines that my webpage is a valuable resource, thereby improving its indexability.

Improving the quality of my content involves several iterative processes.

I aim to provide well-researched, informative, and easy-to-read articles.

The use of headings, subheadings, bullet points, and tables helps to structure information and enhance readability.

Moreover, I give images and other media descriptive alt text so that search engines can interpret every element on the page, which in turn enhances the page’s reach and indexability.
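A quick way to find images without alt text is to scan the page's HTML. The sketch below uses the standard `html.parser` module; `MissingAltFinder` and `images_missing_alt` are hypothetical names. Note that an empty `alt` can be legitimate for purely decorative images, so the output is a list to review rather than a list of errors.

```python
from html.parser import HTMLParser


class MissingAltFinder(HTMLParser):
    """Collect the src of every <img> whose alt is absent or empty."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attr = dict(attrs)
        if not (attr.get("alt") or "").strip():
            self.missing.append(attr.get("src"))


def images_missing_alt(html):
    finder = MissingAltFinder()
    finder.feed(html)
    return finder.missing


html = '<img src="/a.png" alt="Red shoes"><img src="/b.png"><img src="/c.png" alt="">'
print(images_missing_alt(html))
```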

In developing a robust SEO strategy, I understand that a well-crafted link building framework is crucial to enhance a website’s indexability. This involves both acquiring quality backlinks and managing my site’s internal linking structure.

Backlinks, also known as inbound links, are a cornerstone of SEO success. They signal to search engines that others vouch for my content.

When multiple sites link to the same webpage, search engines can infer that the content is worth surfacing on a SERP.

Hence, a thoughtful approach to link building is fundamental.

I always ensure that backlinks are from reputable and relevant sites, as this not only improves my site’s trustworthiness but also its indexability by search engines.

Internal linking refers to the hyperlinks that connect one page of my website to another page within the same domain. These are crucial for several reasons:

To optimise for search engines, I follow these practices:

When I consider the influence of website performance on indexability, I focus on optimising page speed and addressing pervasive technical issues. These are critical to ensuring search engines can efficiently crawl and index my site.

I understand that the swiftness with which my web pages load is paramount. A server that responds quickly lets crawlers fetch more pages per visit, whereas slow responses can limit how much of my site gets crawled and, in turn, indexed.

I utilise tools such as Google’s PageSpeed Insights to measure my page speed and implement suggested improvements.

This can include minimising the size of images, leveraging browser caching, and reducing server response times.

Implementing these changes enhances the crawlability and, subsequently, the indexability of my pages.

In my technical SEO audits, I identify any technical barriers that could prevent search engines from indexing my site.

This includes checking for redirect loops, which can confuse crawlers and waste crawl budget, and fixing any server errors that signal poor website health.

Ensuring clean, efficient coding and optimisation of scripts further aids in reducing load times and crawler confusion.

By systematically resolving these issues, I pave the way for better indexation of my content.
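Redirect loops in particular are easy to detect once the redirects have been recorded. The sketch below assumes a mapping from each URL to its redirect target (for example, exported from a crawl); `trace_redirects`, the `max_hops` limit of 10, and the sample paths are illustrative assumptions.

```python
def trace_redirects(start, redirects, max_hops=10):
    """Follow redirects from start and classify the chain.

    Returns (chain, verdict) where verdict is "ok", "loop", or "too_long".
    """
    chain = [start]
    seen = {start}
    current = start
    while current in redirects:
        current = redirects[current]
        chain.append(current)
        if current in seen:
            return chain, "loop"
        if len(chain) - 1 >= max_hops:
            return chain, "too_long"
        seen.add(current)
    return chain, "ok"


# Hypothetical redirect map: URL -> URL it redirects to.
redirects = {"/a": "/b", "/b": "/c", "/c": "/a", "/old": "/new"}
print(trace_redirects("/a", redirects))
print(trace_redirects("/old", redirects))
```

Long chains that are not loops are flagged separately, since each extra hop still costs a crawler a request.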

As search engines prioritise mobile responsiveness, it’s essential that I focus on optimising websites for mobile indexing and enhancing user experience on mobile devices.

Google’s move to mobile-first indexing signifies that the search engine predominantly uses the mobile version of the content for indexing and ranking.

This approach reflects users’ shift to mobile devices.

For my websites to perform well in search results, I must ensure they are configured properly for mobile-first indexing. This includes fast website loading and good responsive design.

To deliver an optimal user experience, I streamline navigation for mobile users by implementing a responsive design and touch-friendly interfaces. These strategies encompass:

This emphasis on user experience not only supports better performance in search engine results but also aligns with users’ expectations for fast, efficient, and enjoyable interactions on their devices.

By continuously adapting and improving these aspects, I can cater to users’ needs and stay ahead in the SEO landscape.

Google Search Console is a free tool offered by Google that’s essential for any SEO strategy. It allows me to monitor my website’s presence in Google search results, providing insights that are crucial for understanding how my pages are indexed and displayed.

I routinely use Google Search Console to:

In my experience, the cornerstone of ensuring a website’s content is fully accessible to search bots lies in conducting thorough technical SEO audits. This process pinpoints barriers hindering a website’s visibility in search engine results.

When I perform a technical SEO audit, my goal is to create a clear roadmap for improving indexability.

The audit involves several key tools and resources that assess various elements crucial to indexability.

The steps I take during an audit include checking for noindex tags.

To check if a webpage is indexable, I utilise tools like Google Search Console, which report whether a page has been crawled and indexed.

Alternatively, I can inspect the robots.txt file and meta tags to ensure they are not inadvertently blocking search engines.
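The manual meta tag inspection can be scripted. The sketch below looks for `noindex` (or `none`, which implies it) in a page's robots meta tags and in an `X-Robots-Tag` response header value. `RobotsMetaFinder` and `blocks_indexing` are hypothetical names, and the header handling is deliberately simplified: it assumes a plain comma-separated directive list without a user-agent prefix.

```python
from html.parser import HTMLParser


def _split_directives(value):
    """Split a comma-separated directive string into a lowercase set."""
    return {d.strip().lower() for d in value.split(",") if d.strip()}


class RobotsMetaFinder(HTMLParser):
    """Collect directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() in ("robots", "googlebot"):
            self.directives |= _split_directives(attr.get("content") or "")


def blocks_indexing(html, x_robots_tag=""):
    """True if the HTML or the X-Robots-Tag header value forbids indexing."""
    finder = RobotsMetaFinder()
    finder.feed(html)
    directives = finder.directives | _split_directives(x_robots_tag)
    return bool(directives & {"noindex", "none"})


print(blocks_indexing('<meta name="robots" content="noindex, follow">'))
print(blocks_indexing('<meta name="robots" content="index, follow">'))
```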

The main factors affecting indexability are the proper use of meta tags, the inclusion of quality content, the page's load time, and ensuring that the robots.txt file permits search engines to crawl the site.

These elements help guarantee that web crawlers can access and evaluate the content.

Crawlability refers to a search engine's ability to navigate and read a site. Indexability, by contrast, is the capability of a page to be added to the search engine's index. For successful SEO, a site must be both crawlable and indexable.

Improving a website's indexability involves creating a clear site structure, employing SEO-friendly URLs, using sitemaps, and ensuring that content is accessible without requiring user interaction.

These steps make it easier for search engines to find and index my website.

The robots.txt file serves as instructions to search engine bots, indicating which parts of my website should or should not be crawled and potentially indexed.

It's crucial that this file is configured correctly to prevent unintentional blocking of important pages.
